Optical flow

Optical flow or optic flow is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and a scene.[1][2] Optical flow can also be defined as the distribution of apparent velocities of movement of brightness patterns in an image.[3]

The concept of optical flow was introduced by the American psychologist James J. Gibson in the 1940s to describe the visual stimulus provided to animals moving through the world.[4] Gibson stressed the importance of optic flow for affordance perception, the ability to discern possibilities for action within the environment. Followers of Gibson and his ecological approach to psychology have further demonstrated the role of the optical flow stimulus for the perception of movement by the observer in the world; perception of the shape, distance and movement of objects in the world; and the control of locomotion.[5]

The term optical flow is also used by roboticists, encompassing related techniques from image processing and control of navigation including motion detection, object segmentation, time-to-contact information, focus of expansion calculations, luminance, motion compensated encoding, and stereo disparity measurement.[6][7]

Estimation

Optical flow can be estimated in a number of ways. Broadly, optical flow estimation approaches can be divided into machine learning based models (sometimes called data-driven models), classical models (sometimes called knowledge-driven models), which do not use machine learning, and hybrid models, which use aspects of both learning based models and classical models.[8]

Classical Models

Many classical models use the intuitive assumption of brightness constancy: even if a point moves between frames, its brightness stays constant.[9] To formalise this assumption, consider two consecutive frames from a video sequence, with intensity $I(x, y, t)$, where $(x, y)$ refer to pixel coordinates and $t$ refers to time. In this case, the brightness constancy constraint is

$$I(x + u, y + v, t + 1) - I(x, y, t) = 0,$$

where $\mathbf{w} := (u, v)$ is the displacement vector between a point in the first frame and the corresponding point in the second frame. By itself, the brightness constancy constraint cannot be solved for $u$ and $v$ at each pixel, since there is only one equation and two unknowns. This is known as the aperture problem. Therefore, additional constraints must be imposed to estimate the flow field.[10][11]
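As a minimal sketch of the constraint and of the aperture problem, the NumPy snippet below builds a synthetic frame pair with a known integer displacement and measures the brightness constancy residual. The helper names (`make_blob`, `constancy_residual`) and the test images are assumptions made purely for illustration.

```python
import numpy as np

def make_blob(h, w, cy, cx, sigma=3.0):
    """Synthetic frame (illustrative): a Gaussian blob centred at (cy, cx)."""
    y, x = np.mgrid[0:h, 0:w]
    return np.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))

def constancy_residual(frame1, frame2, u, v):
    """Mean |I(x+u, y+v, t+1) - I(x, y, t)| for an integer displacement (u, v).

    Pixels whose displaced position falls outside the frame are ignored.
    """
    h, w = frame1.shape
    ys, xs = np.mgrid[0:h, 0:w]
    yd, xd = ys + v, xs + u
    valid = (yd >= 0) & (yd < h) & (xd >= 0) & (xd < w)
    diff = frame2[yd[valid], xd[valid]] - frame1[ys[valid], xs[valid]]
    return float(np.abs(diff).mean())

# The blob moves by u = 2 (columns) and v = 1 (rows) between the frames.
frame1 = make_blob(32, 32, cy=14, cx=12)
frame2 = make_blob(32, 32, cy=15, cx=14)
res_true = constancy_residual(frame1, frame2, u=2, v=1)  # true displacement
res_zero = constancy_residual(frame1, frame2, u=0, v=0)  # wrong displacement

# Aperture problem: intensity varies with x only, so every vertical
# displacement v satisfies the constraint equally well.
edge = np.tile(np.linspace(0.0, 1.0, 32), (32, 1))
edge_res = [constancy_residual(edge, edge, 0, v) for v in (0, 1, 5)]

print(res_true, res_zero, edge_res)
```

The residual vanishes for the true displacement of the blob, while the edge image cannot distinguish between different vertical displacements, which is why a further constraint is needed.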

Regularized Models

Perhaps the most natural approach to addressing the aperture problem is to apply a smoothness constraint or a regularization constraint to the flow field. One can combine the brightness constancy and smoothness constraints to formulate optical flow estimation as an optimization problem, where the goal is to minimize a cost function of the form

$$E = \iint_\Omega \Psi\big(I(x + u, y + v, t + 1) - I(x, y, t)\big) + \alpha \Psi(|\nabla u|) + \alpha \Psi(|\nabla v|) \, dx \, dy,$$

where $\Omega$ is the extent of the images $I(x, y)$, $\nabla$ is the gradient operator, $\alpha$ is a constant, and $\Psi()$ is a loss function.[9][10]

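This optimisation problem is difficult to solve owing to its non-linearity. To address this issue, one can use a variational approach and linearise the brightness constancy constraint using a first order Taylor series approximation. Specifically, the brightness constancy constraint is approximated as

$$\frac{\partial I}{\partial x} u + \frac{\partial I}{\partial y} v + \frac{\partial I}{\partial t} = 0.$$

For convenience, the derivatives of the image, $\tfrac{\partial I}{\partial x}$, $\tfrac{\partial I}{\partial y}$ and $\tfrac{\partial I}{\partial t}$, are often condensed to become $I_x$, $I_y$ and $I_t$. Doing so allows one to rewrite the linearised brightness constancy constraint as[11]

$$I_x u + I_y v + I_t = 0.$$

The optimization problem can now be rewritten as

$$E = \iint_\Omega \Psi(I_x u + I_y v + I_t) + \alpha \Psi(|\nabla u|) + \alpha \Psi(|\nabla v|) \, dx \, dy.$$

For the choice of $\Psi(x) = x^2$, this method is the same as the Horn-Schunck method.[12] Other choices of cost function have also been used, such as $\Psi(x) = \sqrt{x^2 + \epsilon^2}$, which is a differentiable variant of the $L^1$ norm.[9][13]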
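To make the two choices of loss function concrete, here is a small sketch of the quadratic penalty and of the smooth $L^1$-like penalty (commonly called the Charbonnier penalty). The function names and the default value of `eps` are assumptions chosen for illustration.

```python
import numpy as np

def quadratic(x):
    """Psi(x) = x^2, the choice that gives the Horn-Schunck method."""
    return np.asarray(x, dtype=float) ** 2

def charbonnier(x, eps=1e-3):
    """Psi(x) = sqrt(x^2 + eps^2), a differentiable stand-in for |x|."""
    return np.sqrt(np.asarray(x, dtype=float) ** 2 + eps ** 2)

residuals = np.array([0.1, 1.0, 10.0])
print(quadratic(residuals))    # grows quadratically, so outliers dominate the cost
print(charbonnier(residuals))  # grows roughly linearly once |x| is much larger than eps
```

The slower growth of the second penalty is what makes it less sensitive to large residuals, for example at motion boundaries or occlusions.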

To solve the aforementioned optimization problem, one can use the Euler-Lagrange equations to provide a system of partial differential equations for each point in the image domain $\Omega$. In the simplest case of using $\Psi(x) = x^2$, these equations are

$$I_x (I_x u + I_y v + I_t) - \alpha \Delta u = 0,$$
$$I_y (I_x u + I_y v + I_t) - \alpha \Delta v = 0,$$

where $\Delta = \frac{\partial^2}{\partial x^2} + \frac{\partial^2}{\partial y^2}$ denotes the Laplace operator. Since the image data is made up of discrete pixels, these equations are discretised. Doing so yields a system of linear equations which can be solved for $(u, v)$ at each pixel, using an iterative scheme such as Gauss-Seidel.[12]
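The iterative solution can be sketched as follows. This is a minimal illustration rather than a reference implementation: it assumes the quadratic loss, approximates the Laplacian by four times the difference between the 4-neighbour mean and the centre value, and swaps in a Jacobi-style update (all pixels updated at once) for Gauss-Seidel because it vectorises easily. The names `neighbour_mean` and `horn_schunck` and the default parameters are hypothetical.

```python
import numpy as np

def neighbour_mean(f):
    """Mean of the 4-connected neighbours, replicating values at the border."""
    p = np.pad(f, 1, mode="edge")
    return 0.25 * (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:])

def horn_schunck(frame1, frame2, alpha=1.0, n_iter=200):
    """Dense flow (u, v) from the discretised Euler-Lagrange equations.

    With lap(u) ~ 4 * (mean(u) - u), the equations
        Ix * (Ix*u + Iy*v + It) = alpha * lap(u)
        Iy * (Ix*u + Iy*v + It) = alpha * lap(v)
    can be solved per pixel for (u, v) given the neighbour means.
    """
    f1 = frame1.astype(float)
    f2 = frame2.astype(float)
    # Spatial derivatives of the averaged frames; axis 0 is y, axis 1 is x.
    Iy, Ix = np.gradient(0.5 * (f1 + f2))
    It = f2 - f1
    lam = 4.0 * alpha
    denom = lam + Ix ** 2 + Iy ** 2
    u = np.zeros_like(f1)
    v = np.zeros_like(f1)
    for _ in range(n_iter):
        u_bar, v_bar = neighbour_mean(u), neighbour_mean(v)
        r = (Ix * u_bar + Iy * v_bar + It) / denom
        u = u_bar - Ix * r
        v = v_bar - Iy * r
    return u, v
```

Because the constraint is linearised, a sketch like this is only expected to work for small displacements (around a pixel or less); larger motions are usually handled with coarse-to-fine schemes, which are not shown here.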

An alternative approach is to discretize the optimisation problem and then perform a search of the possible $(u, v)$ values without linearising it.[14] This search is often performed using max-flow min-cut algorithms, linear programming or belief propagation methods.

Parametric Models

Instead of applying the regularization constraint on a point by point basis as per a regularized model, one can group pixels into regions and estimate the motion of these regions. This is known as a parametric model, since the motion of these regions is parameterized. In formulating optical flow estimation in this way, one makes the assumption that the motion field in each region is fully characterised by a set of parameters. Therefore, the goal of a parametric model is to estimate the motion parameters that minimise a loss function which can be written as

$$\hat{\boldsymbol{\alpha}} = \arg\min_{\boldsymbol{\alpha}} \sum_{(x, y) \in \mathcal{R}} g(x, y) \, \rho(x, y, I_1, I_2, u_{\boldsymbol{\alpha}}, v_{\boldsymbol{\alpha}}),$$

where $\boldsymbol{\alpha}$ is the set of parameters determining the motion in the region $\mathcal{R}$, $\rho()$ is the data cost term, $g()$ is a weighting function that determines the influence of pixel $(x, y)$ on the total cost, and $I_1$ and $I_2$ are frames 1 and 2 from a pair of consecutive frames.[9]
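As one possible instance of such a model, the sketch below fits a six-parameter affine motion field to a single region by weighted least squares. The affine parameterisation, the use of the linearised brightness constancy residual as the data cost, and the names `fit_affine_flow` and `affine_flow_at` are all assumptions made for illustration.

```python
import numpy as np

def fit_affine_flow(frame1, frame2, mask, weights=None):
    """Weighted least-squares fit of an affine motion model over one region.

    Model (illustrative): u = a0 + a1*x + a2*y,  v = a3 + a4*x + a5*y.
    Data cost: squared linearised residual (Ix*u + Iy*v + It)^2.
    `mask` is a boolean array selecting the region; `weights` holds the
    per-pixel weighting and defaults to 1 everywhere.
    """
    f1 = frame1.astype(float)
    f2 = frame2.astype(float)
    Iy, Ix = np.gradient(0.5 * (f1 + f2))  # axis 0 is y, axis 1 is x
    It = f2 - f1
    ys, xs = np.nonzero(mask)
    ix, iy, it = Ix[ys, xs], Iy[ys, xs], It[ys, xs]
    g = np.ones(ix.shape) if weights is None else weights[ys, xs].astype(float)
    # One row per pixel: Ix*u + Iy*v = -It with u and v expanded in the parameters.
    A = np.stack([ix, ix * xs, ix * ys, iy, iy * xs, iy * ys], axis=1)
    sw = np.sqrt(g)
    params, *_ = np.linalg.lstsq(A * sw[:, None], -it * sw, rcond=None)
    return params

def affine_flow_at(params, x, y):
    """Evaluate the fitted motion field at pixel coordinates (x, y)."""
    a0, a1, a2, a3, a4, a5 = params
    return a0 + a1 * x + a2 * y, a3 + a4 * x + a5 * y
```

Setting all parameters except `a0` and `a3` to zero reduces this model to pure translation of the region.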

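The simplest parametric model is the Lucas-Kanade method. This uses rectangular regions and parameterises the motion as purely translational. The Lucas-Kanade method uses the original brightness constancy constraint as the data cost term and selects $g(x, y) = 1$. This yields the local loss function

$$\hat{\boldsymbol{\alpha}} = \arg\min_{\boldsymbol{\alpha}} \sum_{(x, y) \in \mathcal{R}} \big(I_2(x + u_{\boldsymbol{\alpha}}, y + v_{\boldsymbol{\alpha}}) - I_1(x, y)\big)^2.$$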
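A single Lucas-Kanade step for one square window can be sketched as below. The sketch assumes a small displacement, so that the squared brightness difference can be linearised and minimised in closed form through the 2x2 normal equations; the function name, window size and conditioning threshold are hypothetical choices.

```python
import numpy as np

def lucas_kanade_window(frame1, frame2, cy, cx, half=7):
    """Translational flow (u, v) for the square window centred at (cy, cx).

    The window must lie fully inside the image. Uniform weighting is used.
    """
    f1 = frame1.astype(float)
    f2 = frame2.astype(float)
    Iy, Ix = np.gradient(0.5 * (f1 + f2))  # axis 0 is y, axis 1 is x
    It = f2 - f1
    win = (slice(cy - half, cy + half + 1), slice(cx - half, cx + half + 1))
    ix, iy, it = Ix[win].ravel(), Iy[win].ravel(), It[win].ravel()
    # Normal equations of sum (Ix*u + Iy*v + It)^2 over the window.
    G = np.array([[ix @ ix, ix @ iy],
                  [ix @ iy, iy @ iy]])
    b = -np.array([ix @ it, iy @ it])
    if np.linalg.cond(G) > 1e8:
        # Gradients point in (at most) one direction: the aperture problem.
        raise ValueError("window lacks texture in two directions")
    u, v = np.linalg.solve(G, b)
    return float(u), float(v)
```

The conditioning check makes the aperture problem explicit: a window that contains only a straight edge yields a singular system, and no unique translation can be recovered from it.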

Other possible local loss functions include the negative normalized cross-correlation between the two frames.[15]
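For comparison, here is a minimal sketch of a purely translational model that uses the negative normalized cross-correlation as its local loss and finds the minimiser by exhaustive search over integer displacements. The function names, block size and search radius are assumptions; the caller is assumed to keep the block and the search range inside the image.

```python
import numpy as np

def ncc(a, b):
    """Normalised cross-correlation of two equally sized patches, in [-1, 1]."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def match_block_ncc(frame1, frame2, cy, cx, half=4, radius=3):
    """Integer (u, v) with |u|, |v| <= radius that minimises the negative NCC."""
    size = 2 * half + 1
    ref = frame1[cy - half:cy - half + size, cx - half:cx - half + size].astype(float)
    best_cost, best_uv = np.inf, (0, 0)
    for v in range(-radius, radius + 1):
        for u in range(-radius, radius + 1):
            y0, x0 = cy + v - half, cx + u - half
            cand = frame2[y0:y0 + size, x0:x0 + size].astype(float)
            cost = -ncc(ref, cand)
            if cost < best_cost:
                best_cost, best_uv = cost, (u, v)
    return best_uv, best_cost
```

Because each patch is mean-centred and normalised, this cost is unaffected by a gain or offset change in brightness between the two frames, which the plain squared difference is not.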

Learning Based Models

Instead of seeking to model optical flow directly, one can train a machine learning system to estimate optical flow. Since 2015, when FlowNet[16] was proposed, learning based models have been applied to optical flow and have gained prominence. Initially, these approaches were based on convolutional neural networks arranged in a U-Net architecture. However, with the advent of the transformer architecture in 2017, transformer based models have gained prominence.[17]

Uses

Motion estimation and video compression have developed as a major aspect of optical flow research. While the optical flow field is superficially similar to a dense motion field derived from the techniques of motion estimation, optical flow is the study of not only the determination of the optical flow field itself, but also of its use in estimating the three-dimensional nature and structure of the scene, as well as the 3D motion of objects and the observer relative to the scene, with most of these methods using the image Jacobian.[18]

Optical flow has been used by robotics researchers in many areas, such as object detection and tracking, image dominant plane extraction, movement detection, robot navigation and visual odometry.[6] Optical flow information has also been recognized as being useful for controlling micro air vehicles.[19]

The application of optical flow includes the problem of inferring not only the motion of the observer and objects in the scene, but also the structure of objects and the environment. Since awareness of motion and the generation of mental maps of the structure of our environment are critical components of animal (and human) vision, the conversion of this innate ability to a computer capability is similarly crucial in the field of machine vision.[20]

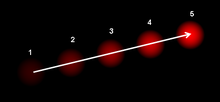

Consider a five-frame clip of a ball moving from the bottom left of a field of vision to the top right. Motion estimation techniques can determine that, on a two-dimensional plane, the ball is moving up and to the right, and vectors describing this motion can be extracted from the sequence of frames. For the purposes of video compression (e.g., MPEG), the sequence is now described as well as it needs to be. However, in the field of machine vision, the question of whether the ball is moving to the right or the observer is moving to the left is unknowable yet critical information. Even if a static, patterned background were present in the five frames, we could not confidently state that the ball was moving to the right, because the pattern might be at an infinite distance from the observer.
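The ambiguity can be illustrated with a toy rendering model. The sketch below assumes a purely translational 2D camera, so that image position equals world position minus camera position, and a blank background; the `render` function and all coordinates are hypothetical.

```python
import numpy as np

def render(ball_x, ball_y, camera_x=0, size=32, radius=3):
    """Render a disc on a blank background as seen from a camera at `camera_x`."""
    y, x = np.mgrid[0:size, 0:size]
    dist2 = (x - (ball_x - camera_x)) ** 2 + (y - ball_y) ** 2
    return (dist2 <= radius ** 2).astype(float)

# Case A: the ball moves 2 pixels to the right, the observer is static.
a1, a2 = render(10, 16), render(12, 16)
# Case B: the ball is static, the observer moves 2 pixels to the left.
b1, b2 = render(10, 16, camera_x=0), render(10, 16, camera_x=-2)

same = np.array_equal(a1, b1) and np.array_equal(a2, b2)
print(same)
```

Both cases produce identical frame pairs, so any flow estimated from the images alone is the same in either case; additional information about the scene or the observer's own motion is needed to tell them apart.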

Optical flow sensor

Various configurations of optical flow sensors exist. One configuration is an image sensor chip connected to a processor programmed to run an optical flow algorithm. Another configuration uses a vision chip, which is an integrated circuit having both the image sensor and the processor on the same die, allowing for a compact implementation.[21][22] An example of this is a generic optical mouse sensor used in an optical mouse. In some cases the processing circuitry may be implemented using analog or mixed-signal circuits to enable fast optical flow computation using minimal current consumption.

One area of contemporary research is the use of neuromorphic engineering techniques to implement circuits that respond to optical flow, and thus may be appropriate for use in an optical flow sensor.[23] Such circuits may draw inspiration from biological neural circuitry that similarly responds to optical flow.

Optical flow sensors are used extensively in computer optical mice, as the main sensing component for measuring the motion of the mouse across a surface.

Optical flow sensors are also being used in robotics applications, primarily where there is a need to measure visual motion or relative motion between the robot and other objects in the vicinity of the robot. The use of optical flow sensors in unmanned aerial vehicles (UAVs), for stability and obstacle avoidance, is also an area of current research.[24]

See also

- Ambient optic array

- Optical mouse

- Range imaging

- Vision processing unit

- Continuity Equation

- Motion field

References

1. Burton, Andrew; Radford, John (1978). Thinking in Perspective: Critical Essays in the Study of Thought Processes. Routledge. ISBN 978-0-416-85840-2.

2. Warren, David H.; Strelow, Edward R. (1985). Electronic Spatial Sensing for the Blind: Contributions from Perception. Springer. ISBN 978-90-247-2689-9.

3. Horn, Berthold K.P.; Schunck, Brian G. (August 1981). "Determining optical flow". Artificial Intelligence. 17 (1–3): 185–203. doi:10.1016/0004-3702(81)90024-2. hdl:1721.1/6337.

4. Gibson, J.J. (1950). The Perception of the Visual World. Houghton Mifflin.

5. Royden, C. S.; Moore, K. D. (2012). "Use of speed cues in the detection of moving objects by moving observers". Vision Research. 59: 17–24. doi:10.1016/j.visres.2012.02.006. PMID 22406544. S2CID 52847487.

6. Aires, Kelson R. T.; Santana, Andre M.; Medeiros, Adelardo A. D. (2008). Optical Flow Using Color Information. ACM New York, NY, USA. ISBN 978-1-59593-753-7.

7. Beauchemin, S. S.; Barron, J. L. (1995). "The computation of optical flow". ACM Computing Surveys. 27 (3). ACM New York, USA: 433–466. doi:10.1145/212094.212141. S2CID 1334552.

8. Zhai, Mingliang; Xiang, Xuezhi; Lv, Ning; Kong, Xiangdong (2021). "Optical flow and scene flow estimation: A survey". Pattern Recognition. 114: 107861. doi:10.1016/j.patcog.2021.107861.

9. Fortun, Denis; Bouthemy, Patrick; Kervrann, Charles (2015-05-01). "Optical flow modeling and computation: A survey". Computer Vision and Image Understanding. 134: 1–21. doi:10.1016/j.cviu.2015.02.008. Retrieved 2024-12-23.

10. Brox, Thomas; Bruhn, Andrés; Papenberg, Nils; Weickert, Joachim (2004). "High Accuracy Optical Flow Estimation Based on a Theory for Warping". Computer Vision - ECCV 2004. Berlin, Heidelberg: Springer Berlin Heidelberg. pp. 25–36.

11. Baker, Simon; Scharstein, Daniel; Lewis, J. P.; Roth, Stefan; Black, Michael J.; Szeliski, Richard (1 March 2011). "A Database and Evaluation Methodology for Optical Flow". International Journal of Computer Vision. 92 (1): 1–31. doi:10.1007/s11263-010-0390-2. ISSN 1573-1405. Retrieved 25 Dec 2024.

12. The named reference "Horn_1980" is cited in the text but is not defined in the source.

13. Sun, Deqing; Roth, Stefan; Black, Michael J. (2010). "Secrets of optical flow estimation and their principles". 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition. San Francisco, CA, USA: IEEE. pp. 2432–2439.

14. Steinbrücker, Frank; Pock, Thomas; Cremers, Daniel; Weickert, Joachim (2009). "Large Displacement Optical Flow Computation without Warping". 2009 IEEE 12th International Conference on Computer Vision. IEEE. pp. 1609–1614.

15. Lucas, Bruce D.; Kanade, Takeo (1981-08-24). An iterative image registration technique with an application to stereo vision. Proceedings of the 7th International Joint Conference on Artificial intelligence - Volume 2. IJCAI'81. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc. pp. 674–679.

16. Dosovitskiy, Alexey; Fischer, Philipp; Ilg, Eddy; Hausser, Philip; Hazirbas, Caner; Golkov, Vladimir; Smagt, Patrick van der; Cremers, Daniel; Brox, Thomas (2015). FlowNet: Learning Optical Flow with Convolutional Networks. 2015 IEEE International Conference on Computer Vision (ICCV). IEEE. pp. 2758–2766. doi:10.1109/ICCV.2015.316. ISBN 978-1-4673-8391-2.

17. Alfarano, Andrea; Maiano, Luca; Papa, Lorenzo; Amerini, Irene (2024). "Estimating optical flow: A comprehensive review of the state of the art". Computer Vision and Image Understanding. 249: 104160. doi:10.1016/j.cviu.2024.104160.

18. Corke, Peter (8 May 2017). "The Image Jacobian". QUT Robot Academy.

19. Barrows, G. L.; Chahl, J. S.; Srinivasan, M. V. (2003). "Biologically inspired visual sensing and flight control". Aeronautical Journal. 107 (1069): 159–268. doi:10.1017/S0001924000011891. S2CID 108782688 – via Cambridge University Press.

20. Brown, Christopher M. (1987). Advances in Computer Vision. Lawrence Erlbaum Associates. ISBN 978-0-89859-648-9.

21. Moini, Alireza (2000). Vision Chips. Boston, MA: Springer US. ISBN 9781461552673. OCLC 851803922.

22. Mead, Carver (1989). Analog VLSI and neural systems. Reading, Mass.: Addison-Wesley. ISBN 0201059924. OCLC 17954003.

23. Stocker, Alan A. (2006). Analog VLSI circuits for the perception of visual motion. Chichester, England: John Wiley & Sons. ISBN 0470034882. OCLC 71521689.

24. Floreano, Dario; Zufferey, Jean-Christophe; Srinivasan, Mandyam V.; Ellington, Charlie, eds. (2009). Flying insects and robots. Heidelberg: Springer. ISBN 9783540893936. OCLC 495477442.

External links

- Finding Optic Flow

- Art of Optical Flow article on fxguide.com (using optical flow in visual effects)

- Optical flow evaluation and ground truth sequences.

- Middlebury Optical flow evaluation and ground truth sequences.

- mrf-registration.net - Optical flow estimation through MRF

- The French Aerospace Lab: GPU implementation of a Lucas-Kanade based optical flow

- CUDA Implementation by CUVI (CUDA Vision & Imaging Library)

- Horn and Schunck Optical Flow: Online demo and source code of the Horn and Schunck method

- TV-L1 Optical Flow: Online demo and source code of the Zach et al. method

- Robust Optical Flow: Online demo and source code of the Brox et al. method